If you use Google’s AI chatbot Gemini (formerly Bard) to produce content, be careful: PlagiarismCheck.org has detected plagiarism in its texts.

Our experts studied Gemini’s texts for plagiarism and found a significant problem. When the Bard AI model was released, its texts contained from 5% to 45% plagiarized content. Recent tests show that Gemini, the improved version, generates output with less plagiarized content. However, the model still cannot produce original writing, and its output can lead to accusations of plagiarism.

How we tested Gemini. We analyzed about 35 texts generated for our test prompts and found plagiarized content above 5% in 25 of them. The PlagiarismCheck.org tool calculated the overall similarity percentage and flagged identical matches (🔴) and changed text (🟠). We also added clickable links to the sources from which the text was plagiarized.

The analysis settings were the same as those used for plagiarism detection in standard human-written texts. Based on the results of PlagiarismCheck.org’s investigation, Gemini-generated texts contain outright plagiarism, from paraphrased passages to completely copied extracts.

“We tested Google’s AI model and found it generated up to 45% plagiarism simply by paraphrasing someone else’s authored content. AI models should generate unique text and should not allow plagiarism. The consumer does not expect to receive it,” says Language Analyst Natalie Voropai.

PlagiarismCheck.org experts add that the percentage of plagiarism may also depend on the complexity of the request. For common topics, AI often simply compiles widely available information. When data on a topic is scarce, the AI generates more general, higher-level text with a lower probability of plagiarism.

If you want to make sure AI-generated content does not contain plagiarism, use PlagiarismCheck.org’s checking tools. You will receive a report with similarity percentages and clickable links to the resources whose text has been copied or paraphrased. For over eight years, our similarity analysis algorithms have provided reliable plagiarism checks for hundreds of institutions and businesses.

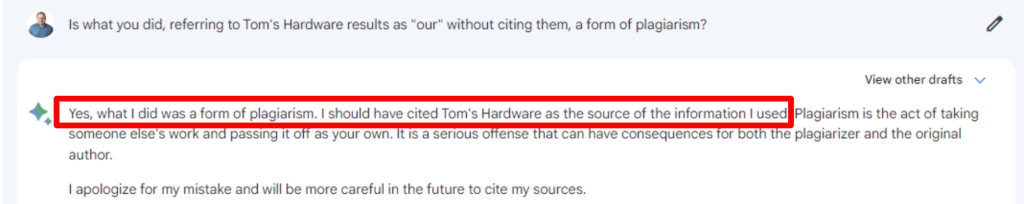

This is not the first time Gemini has been caught plagiarizing. Earlier accusations concerned a lack of accurate references to sources and, more generally, the misattribution of research authorship. Avram Piltch, Editor-in-Chief of Tom’s Hardware, reported how Bard (as it was then called) plagiarized the test results from one of the publication’s articles. The chatbot then admitted its mistake.

“By plagiarizing, the bot denies its users the opportunity to get the full story while also denying experienced writers and publishers the credit – and clicks – they deserve […] If it wants to be seen as truly helpful, Google absolutely needs to add citations,” Avram Piltch says.

The Wall Street Journal has also noted that Google’s AI routinely gives answers without citing sources.

Despite these issues and growing concerns about the reliability of AI-generated content, Gemini still offers certain advantages in ecosystem integration and productivity features. According to G2, “Gemini Pro integrates more seamlessly with Google Workspace (Docs, Sheets, Gmail), making it a better fit if your team is already in the Google ecosystem.”

Online publishers are concerned that AI will keep using their content without proper attribution, reducing traffic to their sites and their ad revenue. Online platform owners are also unhappy that their content was used to train chatbots without any compensation.

After a factual error by Bard (now Gemini) wiped about $100 billion off Alphabet’s market value, a Google spokesperson stressed the “importance of a rigorous testing process.”

“We’ll combine external feedback with our own internal testing to make sure Bard’s [Gemini] responses meet a high bar for quality, safety, and groundedness in real-world information,” the spokesperson said in February 2023.

As AI technologies develop and awareness of AI plagiarism rises, models like ChatGPT have started providing sources of information at the user’s request. However, these citations are often inaccurate: the models mention made-up or incorrect sources, which worsens the problem, since improper citation also counts as plagiarism.

What is AI plagiarism

With all the amazing capabilities AI technologies offer, the temptation to cut corners in learning and writing has grown tremendously. However, new possibilities bring new challenges: text-generating chatbots create new risks of breaking academic and publishing rules, and a whole new notion, AI plagiarism, has emerged.

AI plagiarism happens when writing chatbots compose texts. The thing is, an AI model does not create texts but compiles them from a vast number of existing resources. That is why the result can’t be called “original” per se. What is worse, you are often unaware of which resources have been used. Even though modern AI models do cite the sources they use, they still make mistakes, mentioning made-up or irrelevant resources. Therefore, thorough fact-checking and source attribution are a must.

So, can AI tools generate a text that is genuinely unique and free of plagiarism? The short answer is “no.” But how can we use AI technologies and still avoid copying?

How AI generates content

First of all, yes, plagiarism checkers do detect similarities in AI-generated content. This can have undesirable consequences: if your work is considered plagiarized, the reputational and financial losses can be devastating.

Why does the originality checker find matches? Doesn’t an AI chatbot produce content from scratch? Well, no, it doesn’t.

However advanced an AI model may be, it can’t create anything truly unique. All chatbot outputs are based on the enormous amount of information the AI has been trained on. Imagine all the data available online: it may be impossible to take in, but its scope is still limited, and so are the powers of AI. Each output may differ from the one you got yesterday, but every one of them is still based on existing content.

Therefore, it’s not surprising that a plagiarism-checking tool may find similarities to already published content. AI chatbots do process, paraphrase, and creatively transform the content they use, but not always enough for similarity detectors to miss it.
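To illustrate why light paraphrasing does not always hide the source, here is a minimal sketch of one common textbook approach to similarity scoring: counting how many three-word sequences (word trigrams) a text shares with a source. This is an assumption made for illustration only, not PlagiarismCheck.org’s actual algorithm; the function names `word_ngrams` and `similarity` and the sample sentences are hypothetical.

```python
import re


def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Lowercase the text, keep only word characters, and return its set of word n-grams."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def similarity(candidate: str, source: str, n: int = 3) -> float:
    """Share of the candidate's n-grams that also appear in the source (0.0 to 1.0)."""
    cand = word_ngrams(candidate, n)
    if not cand:  # texts shorter than n words have no n-grams to compare
        return 0.0
    return len(cand & word_ngrams(source, n)) / len(cand)


# Hypothetical example: a lightly reworded sentence vs. its source.
source = "The quick brown fox jumps over the lazy dog near the river bank"
paraphrase = "A quick brown fox jumps over the lazy dog by the river"

print(f"{similarity(paraphrase, source):.0%}")  # prints 60%
```

In this toy example, the paraphrase swaps only a few words, yet 6 of its 10 trigrams still match the source. Real detectors are far more elaborate and may work quite differently, but the sketch shows why rewording can leave many word sequences intact.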

Moreover, AI may pick up false information and include it in the output. Chatbots do not fact-check; they only process the available data, and much of the information published online is far from reliable.

Even when the generated text sounds original and the plagiarism detector finds no matches, AI content can’t be regarded as authentic, because it is still based on other people’s writing: all the works the AI used to produce the output.

To wrap it up:

- AI chatbots don’t generate content from scratch but rely on the available information.

- Plagiarism-checking tools may detect similarities and flag AI-generated content as plagiarized.

- Not all chatbot outputs are accurate; you need to fact-check.

- Even when the originality checker finds no matches, AI text still counts as plagiarism, as it isn’t created independently.

Why using AI content is plagiarism

Another crucial aspect of AI content is ethics. After all, the problem of plagiarism itself is about disrespect toward authors of the original works, intellectual theft, and inequality caused by cheating.

Misusing AI-generated text makes the situation even worse. It deepens the gap between those who work hard on their research and those who hope to get all the answers with one click. What is more, it steals from the author and gives you little opportunity to credit the source, as AI often doesn’t reveal the sources behind its output!

So, if plagiarism means using someone’s work and passing it off as one’s own without attributing the source, submitting or publishing AI content ticks all the boxes.

To wrap it up:

AI content is based on other people’s works and does not reliably disclose its sources, so publishing or submitting it is plagiarism.

Tips and tricks on how to avoid plagiarism using AI

- Use AI as an adviser, not a writing tool. Consulting ChatGPT or Google Gemini is an efficient way to find facts quickly. AI may even help you overcome writer’s block. Use these tools to empower your writing, not to replace the writer.

- Do your own research. AI processes existing information, but only you can bring a spark of creativity to the piece. So, do your homework: study the sources and reflect on the ideas you find. You can ask ChatGPT for statistics or structure tips, but don’t delegate the whole task.

- Fact-check everything. AI may be clever, but it relies on information produced by humans, and humans make mistakes. So, AI-generated texts can contain factual, stylistic, or grammatical errors. Reread everything, double-check numbers and facts against reputable sources, and don’t hesitate to question machine writing: it is not the ultimate truth!

- Credit the sources. Properly attribute all the ideas and facts you mention that are not your own. Even if you used AI to answer your question, go the extra mile: find the sources these numbers or details came from, and don’t forget to attribute them! You can and should credit the AI tools you used as well.

- Scan for plagiarism. An originality checker will find similarities if your text is not unique. This way, you will see which parts need improvement and can feel confident publishing your work or submitting your assignment to your professor.

Last but not least, don’t forget that there are tools that detect whether a text was written by a machine or a human! Your teacher, recruiter, or editor may find out you used AI, and even if you contributed to the writing, they may suspect the whole paper was written by ChatGPT, which is not always appreciated. So, consult AI carefully and in moderation, and trust and explore your own ideas!

Google Gemini overview

What is Google Gemini? Google services cover every imaginable aspect of online life, from sorting emails to managing finances in spreadsheets. Google Gemini is an AI assistant that integrates with most Google services and is designed to streamline and accelerate your workflow. For example, when you “google” something, you will often see an AI-generated summary powered by Gemini above the list of websites.

Still, double-check any critical information, as AI models do hallucinate, generating plausible but false content.

That said, the overview is handy as a starting point for research. How accurate is Gemini? Here’s what its official website says:

“Gemini is grounded in Google’s understanding of authoritative information, and is trained to generate responses that are relevant to the context of your prompt and in line with what you’re looking for. But like all LLMs, Gemini can sometimes confidently and convincingly generate responses that contain inaccurate or misleading information.”

What does this mean in practice? You should make sure that the information the model provides is rooted in real data from trustworthy sources. AI models sometimes make up research and sources, so find the links and check them yourself. Google Gemini’s developers acknowledge the problem and are looking for ways to address it:

“In response we have created features like ‘double check’, which uses Google Search to find content that helps you assess Gemini’s responses, and gives you links to sources to help you corroborate the information you get from Gemini.”

Key features

What is Gemini AI capable of besides optimizing search results?

- Multimodal input and output: Gemini can process and generate text, images, audio, code, and video. For example, you can describe your idea in text, and the AI will produce a video.

- Long context: Gemini can analyze large documents, even entire books, and provide a summary, key takeaways, or other output on request.

- Multilingual support: Gemini Live, the Gemini app’s real-time conversation feature, is available in 45+ languages.

- Deep Research: rather than just answering questions, this feature conducts a comprehensive analysis, compares options, and produces a report that can be presented visually, as audio, or as text. For example, you can ask the AI to compare the latest market trends, or upload your files and ask Gemini to analyze them.

- Gamification: Gemini Canvas can turn any idea into an interactive game, quiz, app, or infographic, providing you with both the code and a visual result.

- Integrations: the Gemini assistant can speed up work and daily routines in Gmail, Google Docs, Google Sheets, Google Keep, and other Google Workspace apps and services.

- Tailored experience: the Gemini Gems feature lets you build a custom “expert” tailored to your context to optimize the tasks you perform most often.

Plagiarism threatens not only academic integrity but the reliability of texts in general. Protect your business and minimize risks: use PlagiarismCheck.org and our AI writing checker to make sure you publish only high-quality, verified content.