3 main concerns about OpenAI’s text classifier

A new round of humans against the machines is here: OpenAI, the company behind ChatGPT, has released a text classifier aimed at distinguishing human-written content from AI-written content. This was their reaction to educators’ discussions about ChatGPT and its possible impact on academic integrity. Does it really work? There are several concerns to consider.

#1. Low accuracy

And by low, we mean “Can’t be trusted. At this stage, at least.” The developers themselves admit the tool correctly identifies only 26% of AI-written text. This means the classifier fails to detect artificial content in 74% of cases. At the same time, it “succeeds” at labeling 9% of texts written by real people as machine-generated. Here’s one example of how the tool fails to identify an AI-written text: “OpenAI AI Text Classifier – A GPT finetuned model to detect ChatGPT and AI Plagiarism.”
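To see what these rates mean in practice, here is a minimal sketch that applies the 26% and 9% figures quoted above to a batch of papers. The batch size and the share of AI-written papers are assumptions chosen purely for illustration, and the function name `expected_flags` is hypothetical.

```python
# Rates quoted in the paragraph above.
DETECTION_RATE = 0.26       # share of AI-written texts correctly flagged
FALSE_POSITIVE_RATE = 0.09  # share of human-written texts wrongly flagged


def expected_flags(total_texts: int, ai_share: float) -> dict:
    """Expected outcome counts for a batch with a given share of AI-written texts."""
    ai_texts = total_texts * ai_share
    human_texts = total_texts - ai_texts
    caught = ai_texts * DETECTION_RATE                  # AI texts flagged as AI
    missed = ai_texts - caught                          # AI texts that slip through
    false_alarms = human_texts * FALSE_POSITIVE_RATE    # human texts flagged as AI
    flagged = caught + false_alarms
    share_correct = caught / flagged if flagged else 0.0
    return {
        "caught": caught,
        "missed": missed,
        "false_alarms": false_alarms,
        "share_of_flags_correct": share_correct,
    }


# Assumption for illustration only: 1,000 papers, 20% of them AI-written.
result = expected_flags(total_texts=1000, ai_share=0.20)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

Under that assumed mix, the classifier would catch 52 AI-written papers, miss 148, and wrongly flag 72 human-written ones, so only about 42% of the flagged papers would actually be AI-written.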

One of the reasons for the high number of false positives and false negatives may be the dataset behind the technology: the classifier was trained on pairs of texts on the same topic, one written by AI and one by a person.

#2. Making students’ work available to the public

To make large language models like GPT-3 smarter and more precise, you need to “feed” them more content. OpenAI in general and its products in particular rely on publicly available datasets. Thus, when you submit your student’s paper to their classifier, it can be added to that dataset. From that moment, the assignment becomes potentially available to thousands of other users whose prompts are specific enough. The next time another student asks ChatGPT to generate an essay with a similar title or instructions, they may get a paraphrase or even an exact match of the paper you’ve submitted.

#3. Lightning-fast learning

Of AI, not people, unfortunately. The main concern here is that GPT-3 and other AI models keep improving as user prompts and feedback are used to refine them. The more people interact with them and correct the outputs, the better the results they deliver next time. It’s the same story with your students’ papers: with more inputs, ChatGPT can produce more human-like assignments. As a result, it may become harder to spot a text’s origin. The antidote is to develop AI-detection software along with the evolution of AI technology.

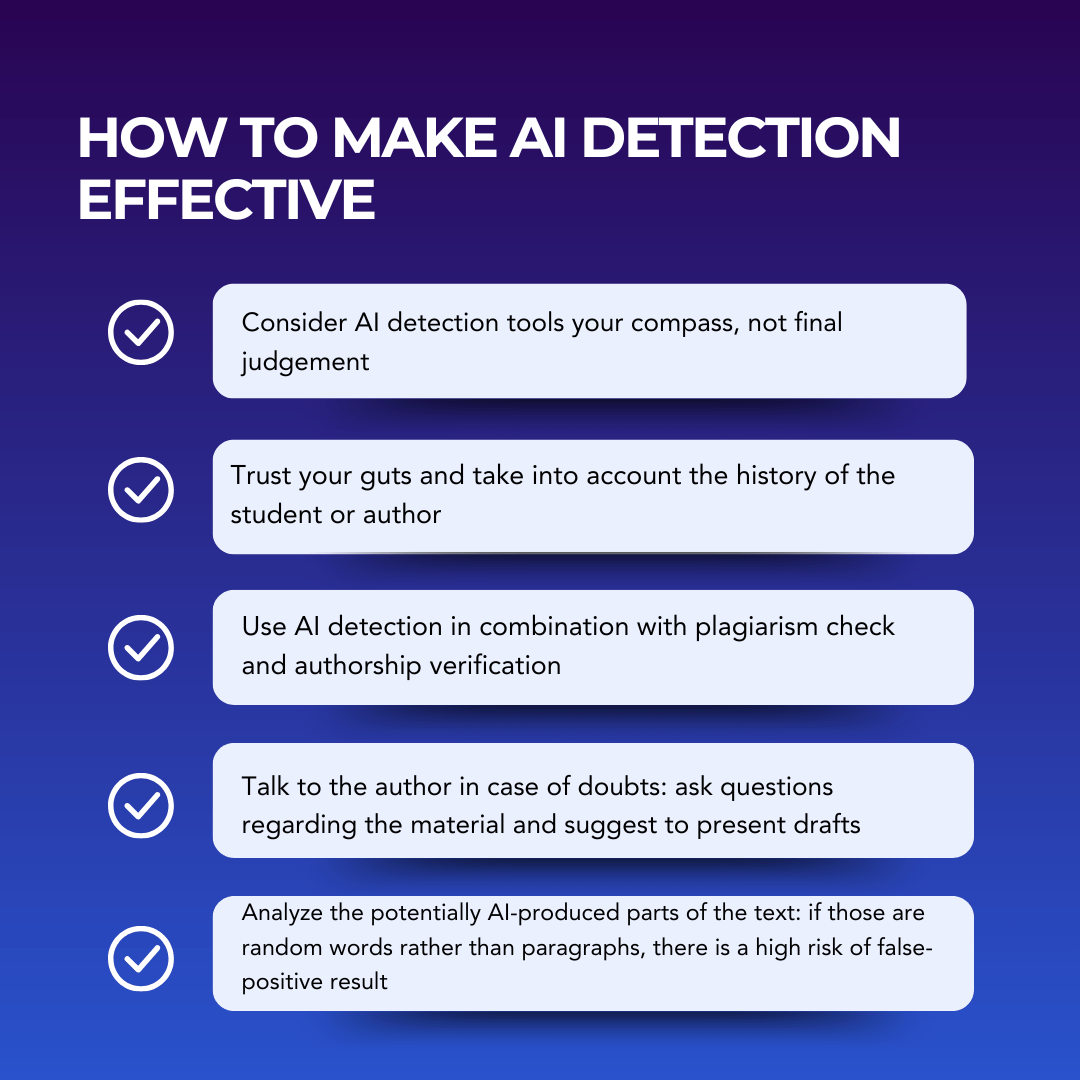

Any solution to that?

The modern market offers multiple tools that aim to provide accurate AI detection. TraceGPT by PlagiarismCheck.org is one of them. It provides:

- 97% accuracy;

- security: the submitted content is never published elsewhere or used to train AI models;

- constant updates and improvements;

- a downloadable report that highlights potentially AI-generated content.

Why is it essential to distinguish AI-generated content from human-generated text? How can AI content detectors help businesses avoid risks and unlock their full potential? Let’s explore some of the key benefits.

1. Distinguishing AI-Generated Content Increases Trust

Readers, customers, and stakeholders can know who created the content: an AI model or a specific author. This allows businesses to ensure reliability and authenticity in their written materials.

2. Ensuring Originality and Uniqueness

Is originality vital to you? Guarantee the uniqueness of both the substance and the style of your content. For now, only the human brain can create something genuinely innovative; artificial intelligence only repeats existing patterns and available information. Provide truly innovative ideas and unconventional visions. Deliver fresh and distinctive materials.

3. Mitigating Reputational Risks and Maintaining Credibility

Avoid the reputational risks of publishing or promoting unlabeled and potentially unreliable AI-generated content. Clearly labeling AI-generated text supports transparency and adherence to leading ethical practices. In addition, avoid the risks of misinformation, fake sources, or plagiarism, which can arise when using some AI models.

Maintain high editorial standards, audience trust, and brand reputation.

4. Streamlining Content Moderation

AI text detectors help content platforms and media outlets streamline their content moderation processes. These detectors can quickly identify AI-generated spam, fake product reviews, or low-quality content, allowing moderators to review and remove such materials efficiently. This helps maintain the integrity and trustworthiness of the platform or publication.
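As a rough illustration of how detector output could feed a moderation workflow, the sketch below builds a review queue from scored items. Everything here is hypothetical: the `Submission` type, the `ai_score` field (assumed to be a detector's output between 0 and 1), and the 0.8 threshold are illustrative assumptions, not the behavior of any particular detector.

```python
from dataclasses import dataclass


@dataclass
class Submission:
    item_id: str
    ai_score: float  # hypothetical detector output in the range [0, 1]


def build_review_queue(submissions, threshold=0.8):
    """Return items at or above the threshold, highest score first."""
    flagged = [s for s in submissions if s.ai_score >= threshold]
    return sorted(flagged, key=lambda s: s.ai_score, reverse=True)


# Made-up scores for illustration only.
queue = build_review_queue([
    Submission("review-1", 0.95),
    Submission("review-2", 0.40),
    Submission("review-3", 0.85),
])
print([s.item_id for s in queue])
```

The sketch sends flagged items to a human moderator instead of removing them automatically, since any detector can produce false positives.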

5. Unlocking AI’s Full Potential

By using AI content detectors, businesses can leverage the full potential of AI technologies while mitigating associated risks. These detectors enable organizations to embrace AI-generated content responsibly and transparently. They can unlock new possibilities for content creation, automation, and innovation while maintaining control and oversight over the content generated by AI models.

Moreover, to deliver accurate results, a detector should keep up with the latest OpenAI models, such as Sora, which can generate more than just text.

What is Sora ChatGPT?

Let’s start the Sora ChatGPT onboarding with the fundamentals. Sora is OpenAI’s text-to-video model: a system that can understand text descriptions and image references and generate video from them.

Without going into too much technical detail (because you don’t need to know all the algorithms the system relies on to use it effectively), Sora can translate abstract concepts into visual content and ensure that objects, characters, and scenes remain coherent as they change throughout the video.

Key points so far

So, what is Sora in ChatGPT’s ecosystem? Here are the three points you need to remember for now:

- What you provide: Text descriptions or image references.

- What you get: High-resolution video (up to 1 minute).

- The tool’s capabilities: Realistic motion, complex physics, and visual consistency.

If you already have images you want to turn into videos, Sora can animate them by adding motion or effects, or extend the scene beyond its original frame. You can also modify existing videos by changing styles or completely reimagining scenes.

Because the OpenAI Sora text-to-video model has a strong grasp of language, it can understand nuance and intent in prompts, which means that you can add layered instructions and indirect references when working on visual content creation. This is one of the key functions that distinguish Sora from simpler prompt-based tools.

How you can use it

Although Sora is still in limited access as of now, you can start using it if you have an active subscription to one of these premium OpenAI services:

- ChatGPT Plus. The standard premium subscription provides access to GPT-4 and priority access to new features, including ChatGPT Sora.

- ChatGPT Pro. This is a higher-tier subscription with enhanced capabilities and higher usage limits.

Now it’s time to start creating videos much faster than you did before. Keep these points in mind:

- Sora turns text (and images) into video. Now, every content creator can get access to visual storytelling without spending a fortune on the necessary tools.

- Detailed prompts lead to better results. Use the chance to think like a director when you describe your scene to Sora.

- Sora understands context, tone, and structure much like ChatGPT does, but its output is video rather than text.

- Use it responsibly and stay mindful of ethical concerns around realism, privacy, and content use.

- Sora is still evolving, so you can expect improvements, new features, and wider access in the future.
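To make the “think like a director” advice concrete, here is a minimal sketch of one way to assemble a detailed prompt from separate scene elements. The `ScenePrompt` class and its fields are hypothetical helpers for organizing your own text. They are not part of any Sora or OpenAI API, and the resulting string is simply what you would paste into the prompt box.

```python
from dataclasses import dataclass


@dataclass
class ScenePrompt:
    """Hypothetical helper: director-style elements of a video prompt."""
    subject: str
    setting: str
    camera: str
    mood: str

    def to_text(self) -> str:
        # Join the non-empty elements into one prompt, one sentence per element.
        parts = [self.subject, self.setting, self.camera, self.mood]
        return ". ".join(p.strip().rstrip(".") for p in parts if p.strip()) + "."


prompt = ScenePrompt(
    subject="A golden retriever runs along a beach",
    setting="Sunset, with waves rolling in",
    camera="Slow tracking shot at ground level",
    mood="Warm, nostalgic tone",
)
print(prompt.to_text())
```

Keeping subject, setting, camera, and mood separate makes it easy to change one element at a time and compare the results.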