Effective, accurate, and fun. That is the working process, and the result, most of us strive for. AI can help with some parts of it, streamlining the writing flow and making it easier to come up with fresh ideas. In other respects, however, chatbot output can become a pitfall: AI is an unreliable ally when it comes to trustworthy information and original content. Still, we are far from calling for a ban on chatbots in education and the creative industries; what we need is to learn how to collaborate with AI ethically.

Hence, tip number one for anyone who works with information is…

Always check for AI presence

It is not only that university professors rightly treat AI-generated papers as cheating. Search engines tend to rank chatbot-produced content lower, hindering a website's visibility and promotion; moreover, AI writing is often monotonous and hard to read. The most serious concerns, however, stem from two facts about AI content:

- it is not original;

- it is not always true.

Because it is trained on enormous amounts of existing text, AI can reproduce that text closely enough to count as plagiarism, getting the author into originality-related trouble even where AI use itself is acceptable. Hence, running a plagiarism checker is a must whenever you bring AI assistance into your writing process.

Another precaution to take when an AI text detector shows that content might be chatbot-generated is fact-checking.

Why fact-checking is crucial for AI content

In a world of fast-food content and fake news, fact-checking is essential for any kind of information. So what makes AI output special?

- Many people tend to take it as the ultimate truth, even though AI writing can contain mistakes.

- Moreover, AI models occasionally hallucinate: they make up facts and produce information that is not true.

The thing is, a chatbot's main priority is to give an answer to your prompt. So the AI does its best, using the data it was trained on, to give you at least something. This "something", though, may be invented by the chatbot and have no basis in reality. Remember those AI-generated pictures of people with seven fingers? Similar distortions can happen to text.

How to fact-check

To remain trustworthy and avoid spreading misconceptions, make sure the information you provide and publish is the real deal. When working with AI content, the easiest first step is to ask the AI for its sources. Sometimes the model names specific books or articles; other times it refers to general knowledge and offers links to further reading on the subject. Either way, you can take it from there and study the materials to confirm the information is true.

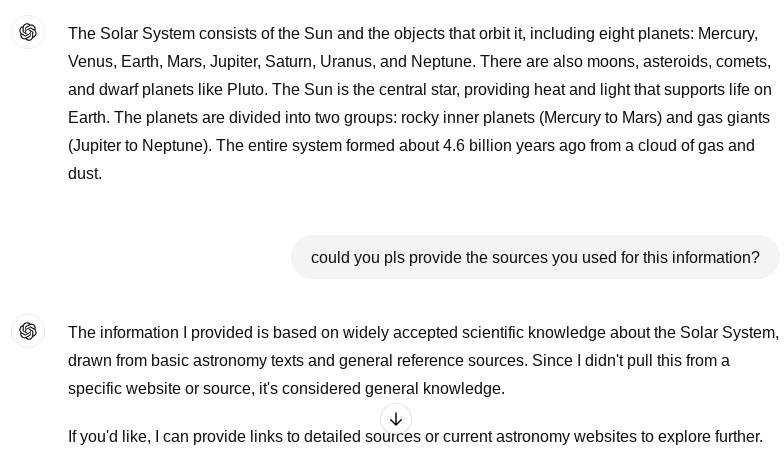

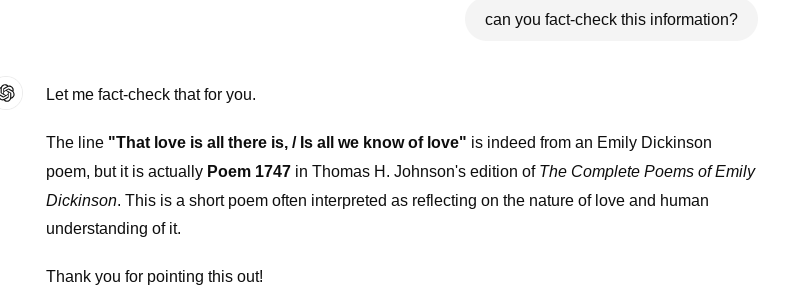

If we ask ChatGPT itself to double-check its output, the answer can be surprising.

Clearly, in this case only human judgment and cross-checking against other data can produce the right answer. Here are some steps for fact-checking the information:

- Work with the sources. Make sure they are trustworthy and not AI-generated; check the publication date to confirm the data is current; look at the reference list and research the credibility of the authors or outlets.

- Compare the facts. This is what fact-checking is all about. You can simply google the information to make sure that several high-quality sources give the same names and numbers. Better still, go to the original source, where the fact or concept was first introduced.

- Use fact-checking tools. Google Fact Check Tools and PolitiFact are among the most trustworthy. Nowadays you can also find a range of AI-powered verification tools that speed up the process.

Whenever you need to craft an original, trustworthy, and engaging text, PlagiarismCheck.org is here for you. Make sure your content sounds natural and won't lead to plagiarism-related problems, and streamline your writing process with our innovative tools! Try it now.